The trolley problem: What would a self-driving car do?

Years ago, a trucker driving down the western slope of the Rocky Mountains lost his brakes. As his truck accelerated, he hoped to make it to the next runaway truck ramp before losing control. However, when he reached it, he saw a car parked at its base with a group of teenagers drinking beer. In a split-second decision, he veered left instead and went off the cliff. In the coming years, what would a self-driving truck do when faced with the same moral dilemma?

Matteo Luccio

Many similar scenarios have been discussed in the technical literature on self-driving vehicles. Most of them are variations on the “trolley problem,” which has been presented to generations of college philosophy students since philosopher Philippa Foot first formulated it in 1967 and Judith Jarvis Thomson adapted it in 1985. In the trolley problem, a person can divert a runaway trolley from the main track, saving five people who are working on it but killing one person on a side track who otherwise would not have been involved.

When faced with an inevitable crash, should a self-driving car slam into a wall, sacrificing its two occupants to save three children crossing the street, or, in effect, target the children to save its occupants? Most people, when polled, choose the former. When shopping for a new car, however, those same people say they would rather buy one that makes their own safety its highest priority.

Human drivers react to emergencies instinctively and in real time, motivated by neither forethought nor malice. By contrast, the choices made by autonomous vehicles are predetermined by programmers; their control systems can potentially estimate the outcomes of various options within milliseconds and take actions that reflect an extensive body of research, debate and legislation. Therefore, we judge those vehicles far more harshly when they make what we deem to be the “wrong” choice.

However, there is no universal agreement as to what constitutes the “right” choice, beyond the fact that people generally want self-driving cars to minimize the number of lives lost and to spare humans over animals and younger people over older ones. General principles such as “minimize harm” offer little guidance in complex, dynamic real-world situations.

Self-driving cars, in addition to their many other benefits, will dramatically reduce traffic accidents and fatalities, because they will never be distracted, drowsy, drunk or drugged. Yet accidents will still happen, and their outcomes will be largely determined far in advance.

The mass introduction of self-driving cars onto public roads will require overcoming technical, legal and ethical challenges. As a society, we will have to agree on a uniform ethical code to guide these vehicles’ decision-making in emergencies. This will force us to explicitly quantify the value of human life and property and encode it in software. These are hard and uncomfortable choices.

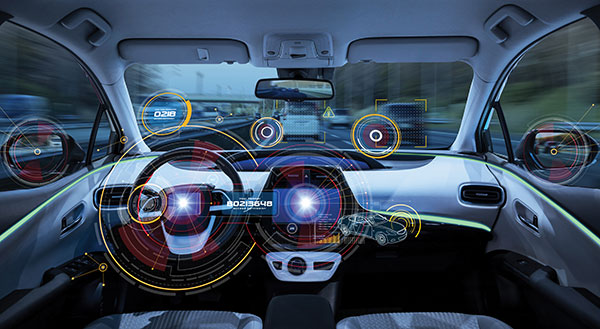

Autonomous systems, fusing data from multiple sensors, will guide these vehicles. It is up to us to decide whom they will target and whom they will spare.

Matteo Luccio | Editor-in-Chief

mluccio@northcoastmedia.net
