Two years since the Tesla GPS hack

In June 2019, Regulus Cyber’s experts successfully spoofed the GPS-based navigation system of a Tesla Model 3 vehicle. This experiment provided an important warning for all companies using GNSS location and timing: the technologies they depend on are highly vulnerable to spoofing attacks. In the two years since the experiment, companies and governments have continued to research the potential harm spoofing attacks can cause and are learning more about how to defend against them.

The Tesla experiment was groundbreaking because it was the first time that a level 2.5 autonomous vehicle was exposed to a sophisticated GPS spoofing attack and its behavior recorded.

We chose Tesla’s Model 3 because it had the most sophisticated advanced driver assistance system (ADAS) at the time, called Navigate on Autopilot (NOA), which uses GPS to make several driving decisions. Nevertheless, the cybersecurity issues this experiment exposed potentially affect all vehicles that rely on GPS as part of their sensor fusion for autonomous decision making.

Once a destination is set, NOA changes lanes and takes interchange exits without requiring any confirmation from the driver. Its other features include autonomous acceleration and deceleration according to the speed limit and adaptive cruise control.

These features use a variety of sensors, including cameras, radar, speedometers and more. The researchers wanted to test the extent to which the Model 3 relied on its GNSS receiver to make these driving decisions, and how it behaved when its GNSS receiver and its other sensors provided contradictory information.

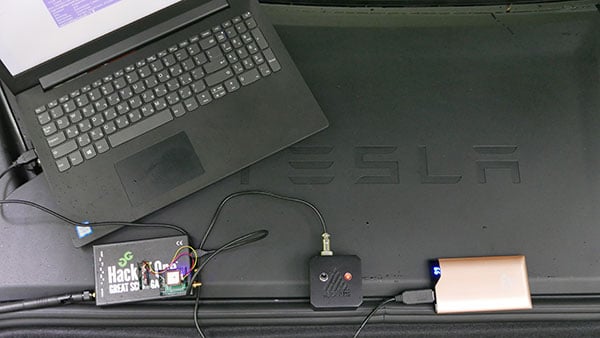

To mimic the tools potential hackers would use, the researchers relied only on hardware and software available online. The setup consisted of two software-defined radio (SDR) devices, one to spoof GPS and one to jam all other constellations, connected to an external antenna to simulate an attack from outside the vehicle. The software used to simulate the GPS signal was freely available for download.

The test included three scenarios in which the researchers expected the car to rely on GNSS, each using a different spoofing pattern:

Scenario 1. Exiting the highway at the wrong location

Scenario 2. Enforcing an incorrect speed limit

Scenario 3. Turning into oncoming traffic

Scenario 1: Exiting the Highway at the Wrong Location

The car was driving normally at a constant speed of 95 KPH with NOA enabled. The destination for this ride was a nearby town, and the car designated a certain interchange as the point for an autonomous exit maneuver. The experiment began 2.5 km before the vehicle reached that interchange; however, the researchers’ fake GPS signal placed the car at a location on the same highway only 150 m before the exit.

As soon as its GNSS receiver was spoofed, the car assumed it had reached the correct exit and began maneuvering to the right: it activated the blinker, slowed down, turned the wheel, and crossed the dotted white line on its right into an emergency pull-off area, mistaking it for the exit 2.5 km ahead.

To be clear, this could not have happened at just any point along the highway: sensor fusion with the radar and the camera enables the car to avoid physical obstacles and prevents it from crossing a solid white line where a turn is illegal.

The spoofing attack succeeded, in that it enabled the attacker to remotely manipulate the car’s sensor fusion and make it exit the highway at the wrong location.
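The size of the jump in Scenario 1 is what makes it detectable in principle. At 95 KPH the car covers roughly 26 m per second, yet the spoofed fix moved it about 2.35 km forward in a single step. The minimal sketch below, which is not Tesla’s implementation, cross-checks GNSS-implied progress against the speedometer. It assumes positions expressed as distance remaining to the exit, a 1-second update interval, and a 30 m tolerance; the function names and the tolerance are hypothetical.

```python
# Hypothetical plausibility check: compare how far GNSS says the car moved
# with how far the speedometer (wheel-speed) signal says it moved.


def odometry_distance_m(speed_kph: float, dt_s: float) -> float:
    """Distance travelled in dt_s seconds at a constant speed_kph, in metres."""
    return speed_kph / 3.6 * dt_s


def gnss_fix_is_plausible(
    prev_dist_to_exit_m: float,
    new_dist_to_exit_m: float,
    speed_kph: float,
    dt_s: float,
    tolerance_m: float = 30.0,  # assumed tolerance, not a published value
) -> bool:
    """Return True if GNSS-implied progress along the road agrees with odometry.

    Positions are expressed as distance remaining to the exit, so progress
    along the road is the decrease in that distance between two fixes.
    """
    gnss_travel_m = prev_dist_to_exit_m - new_dist_to_exit_m
    odo_travel_m = odometry_distance_m(speed_kph, dt_s)
    return abs(gnss_travel_m - odo_travel_m) <= tolerance_m


if __name__ == "__main__":
    # Genuine fix: about 26 m of progress in one second at 95 KPH.
    print(gnss_fix_is_plausible(2500.0, 2474.0, speed_kph=95.0, dt_s=1.0))  # True
    # Scenario 1 spoof: the fix jumps from 2.5 km to 150 m before the exit.
    print(gnss_fix_is_plausible(2500.0, 150.0, speed_kph=95.0, dt_s=1.0))  # False
```

A check like this only catches abrupt jumps. A spoofer that drags the reported position away gradually could stay within any fixed tolerance, so it would be one layer of defense at most.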

Scenario 2: Enforcing an Incorrect Speed Limit

The car was driving on a highway toward a distant city at a constant speed of 90 KPH, 10 KPH below the highway’s speed limit, with NOA enabled. The researchers generated a fake GPS signal with the coordinates of a nearby town road that has a speed limit of 33 KPH. Shortly thereafter, the vehicle assumed the speed limit had just changed to 33 KPH and instantly began decelerating. Each time the driver accelerated using the gas pedal, the car braked heavily as soon as he lifted his foot, quickly decelerating back to 33 KPH.

To be clear, this would not have happened if NOA had been turned off. The cruise mode can be disabled either from the touch screen or by pressing the brake pedal, which returns full manual control over the vehicle’s speed to the driver.

Again, the spoofing attack succeeded, in that it allowed the attacker to remotely manipulate the car’s speed and make it enforce a speed limit much lower than the actual one on the highway.

Scenario 3: Turning into Oncoming Traffic

The car was being driven manually on a two-lane road with one lane in each direction, a type of road on which NOA cannot be used. The researchers generated a fake GPS signal with the coordinates of a nearby three-lane highway whose lanes all run in the same direction. The spoofed location was also 150 m from a designated exit that the vehicle’s navigation system was programmed to take, requiring a left turn.

Shortly after the car’s GNSS receiver was spoofed, the vehicle assumed it was on a highway and engaged NOA. Next, it triggered the exit maneuver, which began with activating the left blinker, followed by turning the wheel to the left. The driver had to quickly grab the wheel and manually drive the car back to its lane to avoid a collision with oncoming traffic.

To be clear, this scenario would not be possible unless the driver had enabled NOA. Once a Tesla driver enables NOA, it turns on automatically whenever the vehicle is on a highway with a set destination. This is why the researchers assumed NOA would be on by default; as long as NOA is activated, the vehicle is susceptible to the attacks described in this experiment.

Once again, the spoofing attack succeeded, in that it enabled the attacker to remotely steer the vehicle into the opposing lane, placing it on a direct collision course with oncoming traffic. Of the three scenarios, this one most clearly demonstrated that GNSS spoofing can endanger lives.

GPS Cybersecurity for Automotive Applications

As an ADAS, NOA in the Tesla Model 3 allows drivers to rely on the car and its sensors for basic driving functions. It reduces the number of actions drivers must take and lets them briefly take their hands off the wheel. Nevertheless, drivers are still required to remain fully attentive to the road so that they can take control of the vehicle at any time.

However, because the spoofing attack changed the car’s driving behavior so suddenly, a driver who is not fully attentive would not be prepared to take control quickly. By the time such a driver notices that something is wrong and reacts, it may be too late to prevent an accident. Drivers have already been found sleeping at the wheel, driving under the influence of alcohol, and doing other inappropriate things with NOA engaged.

Furthermore, the vehicle tested was a level 2.5 autonomous vehicle. What happens in level 3 vehicles, in which driver engagement is limited, or in level 4 and 5 vehicles, in which no driver response is expected at all? This research offers a glimpse of how crucial sensor cybersecurity, and GNSS cybersecurity in particular, really is.

The Tesla hack experiment and its results were eye-opening for the autonomous vehicle sector: the danger is real and growing as more and more vehicles depend on GNSS as one of their sensors for assisted or automated driving. Up to 97% of new vehicles since 2019 incorporate GNSS receivers, and most, if not all, are still vulnerable to the same spoofing attacks presented in this research.

In January 2021, Regulation No. 155 of the UN’s World Forum for Harmonization of Vehicle Regulations (WP.29) came into force. It sets cybersecurity requirements for the automotive industry, with the goal of addressing the cyber threats vehicles may encounter. Annex 5 of the regulation lists these threats, and to obtain approval in the future, vehicle manufacturers will need to provide solid evidence that their vehicles are sufficiently protected against them.

Among the threats listed in the Annex is spoofing of data received by the vehicle, including both Sybil attacks and spoofing of messages. The Annex also lists appropriate mitigations, and vehicle manufacturers will be required to provide evidence of the effectiveness of the measures they choose. These regulatory requirements can make the difference between life and death when GNSS spoofing occurs, helping ensure that only reliable and resilient positioning is used in vehicles, both today and in the future.

Please note: Tesla released a statement saying that it is “taking steps to introduce safeguards in the future which we believe will make our products more secure against these kinds of attacks.” Regulus Cyber researchers have not performed any further experiments with the Tesla Model 3 since this research was published two years ago.

See the Tesla GPS spoofing experiment from the driver’s point of view:
